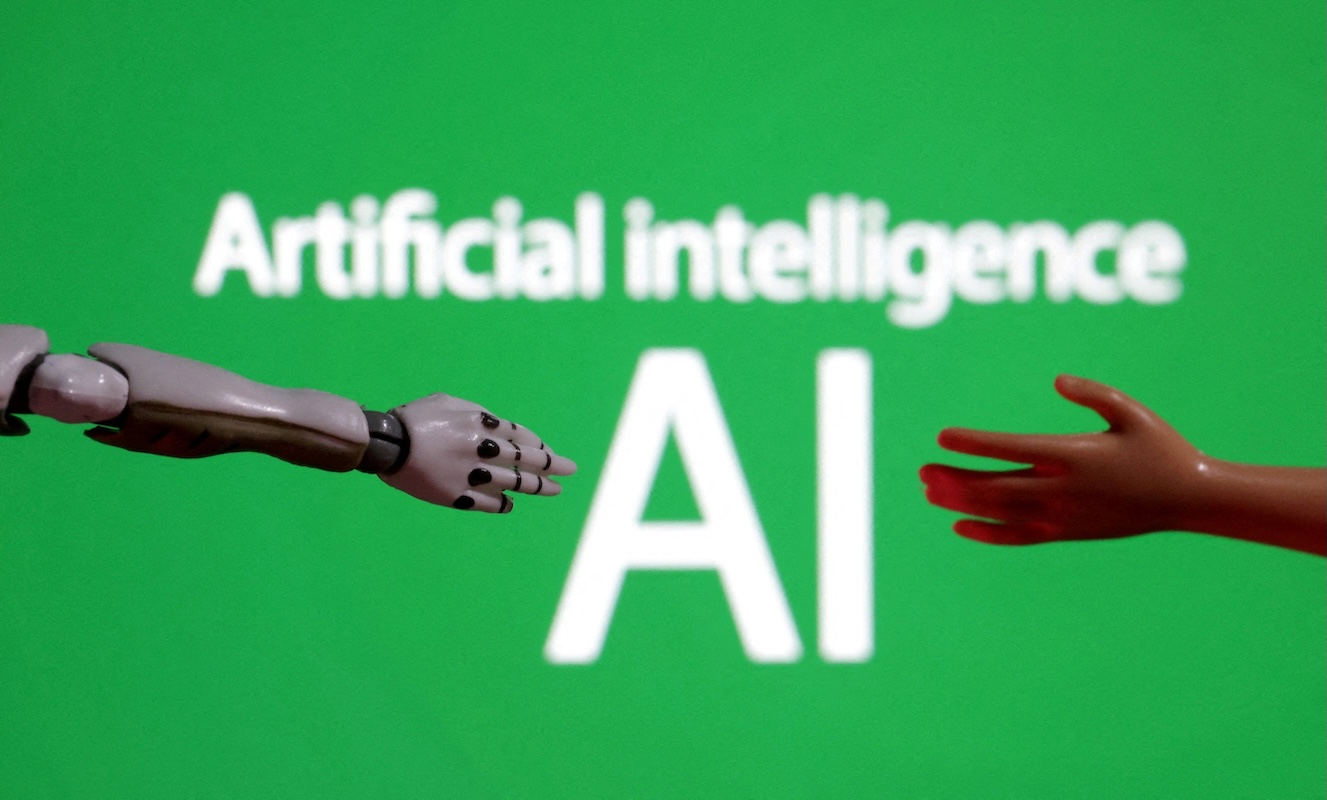

Trump’s order and Pope Leo’s vision for regulating AI: Can they converge?

(OSV News) — Since generative artificial intelligence took off in the 2020s, with its ability to generate human-like text, realistic images, and convincing video, users and developers of AI have warned that consistent regulatory guardrails are necessary to protect against documented harms, an issue of particular concern to Pope Leo XIV.

On Dec. 11, 2025, President Donald Trump signed an executive order bearing the promising title, “Ensuring a National Policy Framework for Artificial Intelligence.” Yet Trump’s executive order, which calls for federal regulation to supersede state regulation, specifically declares “AI companies must be free to innovate without cumbersome regulation.”

OSV News asked Taylor Black, founding director of the Leonum Institute for AI & Emerging Technology at The Catholic University of America and director of AI & Venture Ecosystems at Microsoft Corporation, to share his thoughts about how, or whether, these two aims can be reconciled and what the Catholic faith has to say on the ethical development of AI.

Pros and cons of regulating AI

OSV News: What are the potential impacts of this executive order, both positive and negative?

Taylor Black: The executive order raises a question we’ve been wrestling with since the earliest days of AI governance: What is the proper locus of regulatory authority over technologies that are, by their nature, borderless?

There are legitimate arguments on both sides here.

A unified national framework could provide the clarity and consistency that responsible developers — particularly smaller firms and startups — genuinely need. The current patchwork of state laws does create compliance challenges, and there’s a real risk that well-intentioned, but technically uninformed, regulations could inadvertently stifle beneficial innovation. This is not an abstract concern. I’ve seen it firsthand.

But we need to be honest about what we’re trading away. States have historically served as laboratories of democracy, and in the AI space, some of the most thoughtful regulatory efforts have emerged at the state level precisely because state legislators are often closer to the communities experiencing AI’s real-world effects. Colorado’s algorithmic discrimination law, which the (executive) order specifically criticizes, represents an attempt to address documented harms — harms that communities of color, low-income families, and other marginalized groups are experiencing right now, not hypothetically.

A Catholic view on AI regulation

The order positions “innovation” and “responsible oversight” as fundamentally in tension. But this is precisely the false dichotomy that Pope Leo XIV addressed in his message to the (Nov. 6-7, 2025, Rome) Builders AI Forum: “The question is not merely what AI can do, but who we are becoming through the technologies we build.” That framing matters. It shifts us from a purely utilitarian calculus to something more fundamental — a question about human identity and flourishing.

Catholic social teaching doesn’t ask us to choose between human flourishing and economic dynamism: It insists that authentic development must include both. As the Holy Father reminded the Forum participants, “Technological innovation can be a form of participation in the divine act of creation.” But precisely because of that creative participation, “it carries an ethical and spiritual weight, for every design choice expresses a vision of humanity.”

The question isn’t whether we regulate, but whether we regulate wisely, in ways that protect human dignity while allowing genuine creativity to flourish.

Tensions in regulating AI: national law versus local enforcement, tech freedom versus safety

OSV News: A major ethical concern is protection of children. The administration said the framework will take that into consideration. Are there any plausible issues if states aren’t able to engage in “local” regulation and enforcement?

Black: The administration has committed to ensuring that child safety protections remain intact, and Section 8(b)(i) explicitly exempts state laws relating to child safety from preemption. That’s important, and I take it seriously.

But here’s what keeps me up at night: enforcement capacity.

State attorneys general have been at the forefront of child protection efforts in the digital space. They understand their communities. They can move quickly. They’ve built relationships with local schools, parents, law enforcement, and advocacy organizations. A national framework, no matter how well-intentioned, cannot replicate this granular, relational capacity.

The exploitation of children online is not an abstract policy debate; it’s happening in real time, at scale, and the perpetrators are adapting faster than centralized regulatory bodies can respond. The platforms themselves have acknowledged — sometimes under legal pressure — that their own safety teams are overwhelmed.

This is not a matter where we can afford to experiment with jurisdictional reshuffling while we “wait and see” what a national framework looks like. The principle of subsidiarity — that matters should be handled by the smallest competent authority — suggests that states should retain meaningful enforcement capacity, not merely formal statutory language.

Any national framework must include robust funding for state-level enforcement, clear mechanisms for state attorneys general to act on child safety matters without federal preemption delays, and explicit sunset provisions that restore state authority if federal enforcement proves inadequate.

OSV News: “Big Tech” sometimes resists regulations as harmful to innovation. Yet the ways AI is already being used to exploit people are documented. How can, or will, these be balanced in a national framework policy, especially given concerns expressed by the Vatican and Pope Leo?

Black: The argument from industry — and I say this as someone who has spent years working within industry — is that regulation stifles innovation. There’s a version of this argument that’s correct: poorly designed regulation, written without technical understanding, can create perverse incentives and real costs without corresponding benefits.

But there’s also a version of this argument that’s simply a request for impunity. And we’ve seen where that leads.

The exploitation we documented at the (Nov. 14 Catholic University Law School “Corporate Social Responsibility of Big Tech”) conference — sexual extortion, forced labor, algorithmic discrimination — isn’t hypothetical. These are current harms, happening to real people, often facilitated by systems that were deployed with minimal oversight because “moving fast” was treated as an unqualified good.

What might balanced AI regulations include?

Here’s what I believe a balanced national framework must include:

First, transparency requirements that allow independent researchers, civil society, and affected communities to understand how systems work — without requiring the disclosure of genuinely proprietary technical details.

Second, meaningful accountability mechanisms that assign responsibility when AI systems cause harm. Blanket immunity benefits no one except bad actors.

Third, investment in formation, not just training. We need engineers, executives, and policymakers who have been formed in the moral traditions that can help them navigate these questions — not just trained in technical compliance.

Fourth, ongoing engagement with the communities most affected. The Rome Call for AI Ethics — signed by the Vatican, Microsoft, IBM, and others — calls for inclusion. That means the people being governed by these systems should have a voice in how they’re designed and deployed. It also means building the shared infrastructure that allows institutions, particularly Catholic institutions, to act intelligently and generatively rather than remaining passive recipients of technology built by others.

Fifth, explicit recognition that “innovation” divorced from ethical responsibility is not authentic development.

The Holy Father has been clear about what happens when we get this wrong. In his first interview as pope, he warned that “the danger is that the digital world will follow its own path and we will become pawns, or be brushed aside.” He noted that “extremely wealthy” people are investing in AI while “totally ignoring the value of human beings and humanity.”

Catholic social teaching: An ethical, human-first framework for approaching AI use and regulation

The Church’s vision is not Luddite resistance but something more radical: technology ordered to the integral development of the human person. And realizing that vision will require shared infrastructure — open, interoperable, governed by the communities it serves — not fragmented vendor stacks and ad hoc tech decisions. This is precisely the kind of federated, ecosystem-level thinking that must inform any national framework.

OSV News: Is there anything else you’d like to add?

Black: I don’t come to this question as an opponent of the technology industry or as a skeptic of innovation. I’ve spent my career building and investing in emerging technologies, because I believe in their potential for genuine human benefit.

But I also believe that Catholic social teaching offers us something the current debate often lacks: a framework that begins not with systems or markets, but with the human person, created in the image and likeness of God, endowed with a dignity that no algorithm can confer or remove.

The Church cannot content itself with critique from the sidelines. We must build — ventures, infrastructure, formation pathways — that embody the vision we articulate. Pope Leo XIV is right: This must be “a profoundly ecclesial endeavor.” And that endeavor requires institutional architecture, not just academic programs.

Pope Leo XIV concluded his message to the Builders AI Forum with this prayer: “May your collaboration bear fruit in an AI that reflects the Creator’s design: intelligent, relational and guided by love. May the Lord bless your efforts and make them a sign of hope for the whole human family.”

That vision — AI as a sign of hope for the whole human family — should be the standard against which we measure any national framework.

The question before us isn’t simply “How do we win the AI race?” It’s “What kind of society are we building, and who gets left behind?”

Kimberley Heatherington is an OSV News correspondent. She writes from Virginia.

The post Trump’s order and Pope Leo’s vision for regulating AI: Can they converge? first appeared on OSV News.